WP6 (formerly T1, now T2) is currently investigating how European NRENs support their user communities in making optimal use of their networks for large scale data transfers, including the use of Data Transfer Nodes (DTNs).

It would be very useful for us to get your feedback on your current practices and on your interest in engaging further with WP6 T1 on this topic (gn4-3-wp6-dtn@lists.geant.org). Your feedback will be taken into consideration when steering our future work.

GÉANT NREN Survey results

Survey presentation:

- STF meeting, 22-23 Oct. 2019, Copenhagen (.pdf)

Questions:

Q1. Which (research) communities are you aware of that are moving large volumes of data across your network?

(Answered: 21 Skipped: 9)

- The FileSender service is supported by most NRENs

- AENEAS project (https://www.aeneas2020.eu/)

- Federated European Science Data Center (ESDC) to support the astronomical community in achieving the scientific goals of the Square Kilometer Array (SKA)

- Process (https://www.process-project.eu/)

- Creating data applications for collaborative research (Exascale learning on medical image data, SKA/LOFAR, Ancillary pricing for airline revenue management, Agricultural Analysis based on Copernicus data)

- Fiona Pacific data exchange PRP (https://prp.ucsd.edu/); Petascale DTN Project

- Effort to improve data transfer performance between the DOE ASCR HPC facilities at ANL, LBNL, ORNL and NCSA (4.4 TB of cosmology simulation data)

Q2. Are there any success stories regarding data transfer that you can report? What difficulties have you experienced?

(Answered: 17 Skipped: 13)

Most NRENs are doing small scale research projects regarding DTN deployment:

- Success stories (1): LOFAR, E-VLBI and HEP (NIKHEF) - SURFnet. For LOFAR, the input rate is 250 Gbit/s, of which about 80 Gbit/s is international. E-VLBI experiments are supported with 30 Gbit/s to JIVE, and for HEP 100 Gbit/s is provisioned to NIKHEF.

- Success stories (2): Fast data transfer for cancer research - IUCC (article link)

- Success stories (3): Moving ESA traffic from peering with Deutsche Telekom to DFN/GÉANT path - ACOnet

- Success stories (4): 100 Gbit/s data transfer between Imperial College using a Globus Connect endpoint - Janet

- Success stories (5): LHCONE, LOFAR and LHC data transfer trials by 5 NRENs

The major difficulties:

- Connecting the computers and internal lab network to the NREN infrastructure

- Tuning of network parameters in the campus/LAN

- Not using the Science DMZ concept

Q3. What problems do your communities report to you around data transfers?

(Answered: 19 Skipped: 11)

- Performance and NOC issues

- Firewall problems, DMZ

- Local Campus issues (last mile)

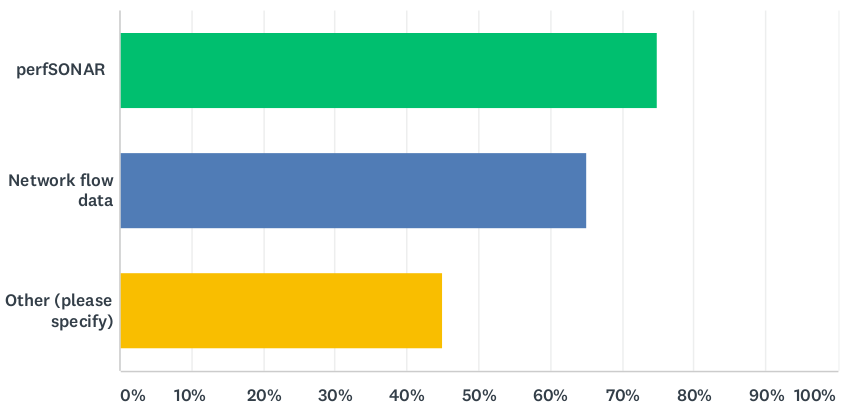

Q4. Which tools or methods do you use to measure the data transfers and the data rates or the performance that can be achieved?

(Answered: 21 Skipped: 9)

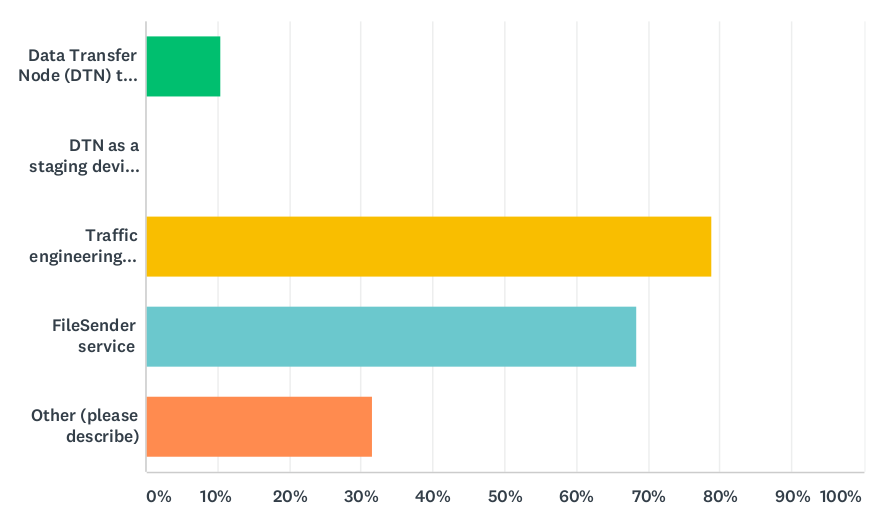

Q5. What services do you provide to your users to help them move data across your network?

(Answered: 20 Skipped: 10)

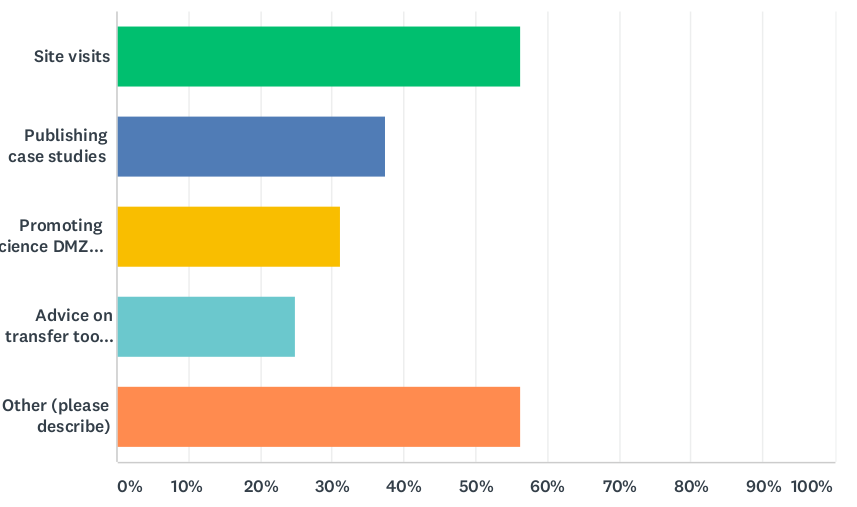

Q6. Do you provide any other forms of assistance to your users to help them achieve optimal data transfers across your network?

(Answered: 17 Skipped: 13)

- Other: Globus online

Q7. Do you collaborate on large scale data transfers with international organisations and NRENs? If so, with whom and in what way?

(Answered: 17 Skipped: 13)

- The NRENs that collaborate with international organisations work on the following projects: WLCG, LHCONE, PRACE, HEP

Q8. Would you be willing to work with us (GN4-3 WP6) on improving data transfer infrastructures for the community?

(Answered: 19 Skipped: 11)

Q9. Additional comments related to data transfer that you could not express in the previous questions

(Answered: 4 Skipped: 26)

- DTNs need standardization of inter-domain protocols. More international experimentation is needed.

- The use of DTNs and a DMZ is not considered a solution for large data transfers in environments where end-user access capacity is below 100 Gbit/s. Where capacity is not restricted, a set of tools is already available.

- Usually the bottleneck is in users’ local network premises and in users’ applications.

- Note also that some data cannot be moved from certified sites (biomedical).

- As a general comment, the concept of high-rate data transfer between nodes needs more dissemination and explanation (end-node tuning, long fat pipes, etc.).

- We would like to know the best practices so that we follow them, so would like to know the results of this GN4 WP6 workgroup.
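
One of the comments above asks for more explanation of end-node tuning and "long fat pipes". As a minimal sketch of the underlying arithmetic, the snippet below computes the bandwidth-delay product (the amount of data that must be in flight to keep a path full) and the throughput ceiling a fixed TCP window imposes on a single stream. The link speeds, round-trip times and window sizes are illustrative assumptions, not survey results, and the function names are hypothetical.

```python
def bandwidth_delay_product_bytes(link_gbit_s: float, rtt_ms: float) -> float:
    """Bytes that must be in flight to fill a path of the given speed and RTT."""
    bits_per_second = link_gbit_s * 1e9
    rtt_seconds = rtt_ms / 1e3
    return bits_per_second * rtt_seconds / 8


def window_limited_throughput_gbit_s(window_bytes: float, rtt_ms: float) -> float:
    """Upper bound on single-stream throughput when at most one window is sent per RTT."""
    rtt_seconds = rtt_ms / 1e3
    return window_bytes * 8 / rtt_seconds / 1e9


if __name__ == "__main__":
    # Assumed example path: 100 Gbit/s with a 20 ms round-trip time.
    bdp = bandwidth_delay_product_bytes(100, 20)
    print(f"Buffer needed to fill the path: {bdp / 1e6:.0f} MB")

    # Assumed example: an untuned 64 KiB window on the same path.
    limit = window_limited_throughput_gbit_s(64 * 1024, 20)
    print(f"Throughput ceiling with a 64 KiB window: {limit * 1e3:.1f} Mbit/s")
```

Under these assumptions, the gap between the two figures shows why host buffer sizes on both end nodes need to be raised to the order of the bandwidth-delay product before a long, high-capacity path can be filled by a single stream.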